We recently developed an incredible machine learning workstation. It was born out of necessity while we were developing image recognition algorithms for cancer detection. The idea proved so powerful that we decided to market it as a product to help developers implement artificial intelligence tools. During the launch process, I came across an interesting Wired article on Google’s foray into hardware optimization for AI, the Tensor Processing Unit (TPU), and on the release of the second-generation TPU.

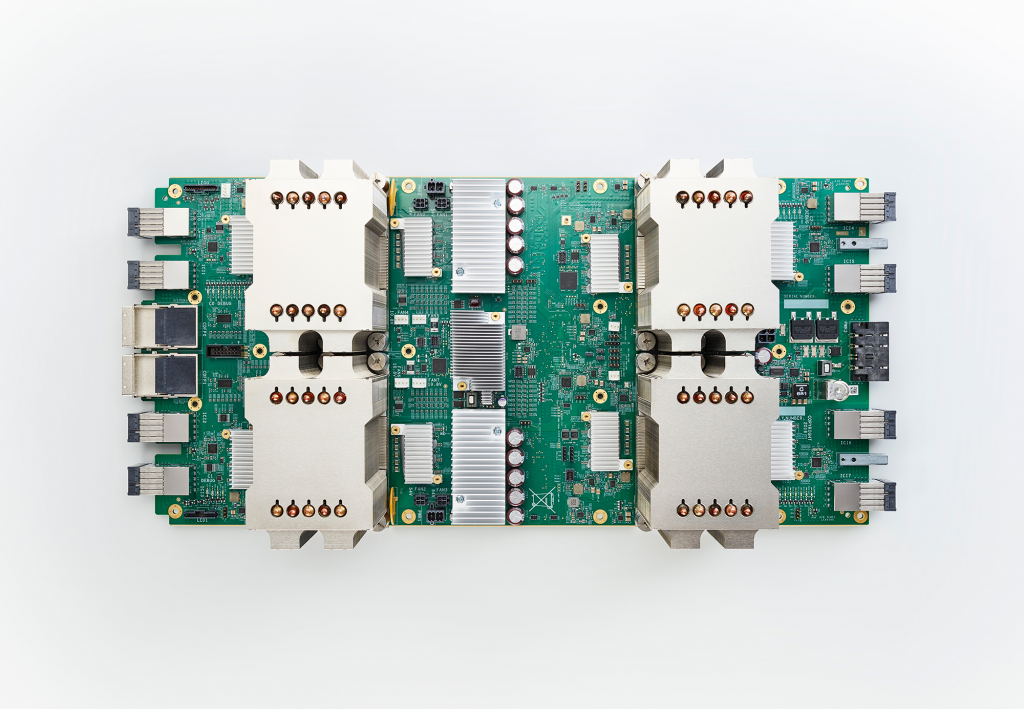

I was a little confused by the benchmark published by Wired. The original TPU is an integer-ops co-processor, yet Wired cites teraflops as a metric. The article does not make clear which specific operations those figures refer to: tensor operations or FP64 compute. If the metrics really are FP64 compute, then the TPU is faster than any GPU currently available, which I suspect isn’t the case, given its low power consumption. If the numbers refer to tensor ops, then the TPU has only about one third of the performance of latest-generation GPUs like the recently announced Nvidia Volta.

Then I came across a very interesting and more detailed post from Nvidia’s CEO, Jensen Huang, that directly compared Nvidia’s latest Volta processor with Google’s Tensor Processing Unit. Because it never specifies what the teraflops figures stand for, the Wired article felt like a public relations piece, even though it comes from a fairly well-established publication. Nvidia’s blog post puts the performance metrics into better context than the technology journalist’s article does. I wish Wired had given more specificity and context for the benchmarks it cites, instead of just copy-pasting the marketing material passed on by Google. From a market standpoint, I still think TPUs are a bargaining chip that Google can wave at GPU vendors like AMD and Nvidia to bring the prices of their workstation GPUs down. I also think Google isn’t really serious about building the processor. Like most Google projects, the aim is to invest the least amount of money for the maximum profit. Therefore, the chip won’t have any IP related to really fast FP64 compute.

Low-power, many-core processors have been under development for many years. The Sunway processor from China is built on a similar philosophy, but optimized for FP64 compute. Outside of one supercomputer center, I don’t know of any developer group working on Sunway. Another recent example, this time in the US, is Intel, which tried the same approach with its Knights Corner and Knights Landing range of products and fell flat on its face. I firmly believe Google is on the wrong side of history. It will be really hard to gain dev-op traction, especially for custom-built hardware.

Let us see how this evolves, and whether it is just the usual Valley hype or something real. I like the Facebook engineer’s quote in the Wired article. I am firmly on the consumer GPU side of things. If history is a teacher, custom co-processors that are hard to program have never really succeeded in gaining market or customer traction. A great example is Silicon Graphics (SGI). They were once at the bleeding edge of high-performance computational tools, and then lost their market to commodity hardware that became faster and cheaper than SGI’s custom-built machines.

More interest in making artificial intelligence run faster is always good news, and this is very exciting for AI applications in the enterprise. But I have another problem: Google has no official plans to market the TPU. A company like ours, moad, relies on companies like Nvidia developing cutting-edge hardware and letting us integrate it into a coherent, marketable product. In Google’s case, the most likely scenario is that Google will deploy the TPU only on its own cloud platform. In a couple of years, hardware evolution will make it as fast as, or faster than, any other product on the market, making Google’s cloud servers a default monopoly. I have a problem with this model. Not only will these developments keep independent developers from leveraging the benefits of AI, they will also shrink the marketplace significantly.

I only wish Google had published clear-cut plans to market its TPU devices to third-party system integrators and data center operators like us, so that the AI revolution would be more accessible and democratized, just like what Microsoft did with the PC revolution in the 90s.

(Image captions from top to bottom: 1) Header image of our storefront for the machine learning dev-op server, 2) Tensor Processing Unit version 2, from Google’s TPU2 blog post, 3) Nvidia Tesla Volta V100 GPU, from wccftech, 4) Sunway TaihuLight supercomputer, powered by Sunway microprocessors, from Top500, 5) An advertisement for the Silicon Graphics O2 Visual Workstation, obtained from public domain via Pinterest, 6) Gerty, the artificially intelligent robot in the Duncan Jones-directed movie Moon (2009), obtained from public domain via Pinterest, 7) A meme about the marketing department, obtained via public domain from the blog ‘A small orange’.)